Taking stock

We have a stable platform which, going up the stack, consists of:

- Hardware (Pis, SD cards, network connectivity)

- Operating System images – made with Packer and flashed to the Pis

- Base configuration – OS-level configuration of packages and users. Provided as

- Service configurations – an Ansible role for each of the platform layers (Consul, Nomad, etc.)

At this layer, we can either Terraform the service for something higher-up, or consume the service directly, for example using Nomad to deploy a workload.
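As a rough illustration of the first option, Terraform can manage what runs on Nomad through the Nomad provider. This is only a sketch under assumptions: the server address, the resource name and the job file path below are hypothetical placeholders.

```hcl
# Hypothetical sketch: managing a Nomad job from Terraform.
terraform {
  required_providers {
    nomad = {
      source = "hashicorp/nomad"
    }
  }
}

provider "nomad" {
  # Assumed address of a Nomad server; 4646 is Nomad's default HTTP port.
  address = "http://nomad.example.internal:4646"
}

resource "nomad_job" "example" {
  # Assumed path to a job specification kept next to this file.
  jobspec = file("${path.module}/example.nomad.hcl")
}
```

The second option, consuming the service directly, amounts to handing the same kind of job file straight to Nomad.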

Platform

Now we have something approaching a platform, something which we can ‘code against’. By this, I mean that I can write a declaration of something I want, which consumes the resources my platform offers and which can then be executed. The platform layers in place now are Consul and, on top of that, Nomad. These have been layered using Ansible roles and playbooks, which idempotently converge the bare metal to a desired state. However, the platform does not yet do anything one might consider useful – to get to that stage, we need to define some services.

The first of these services will be a workload orchestrator, Nomad.

A word about workloads

Before going into the details of how I deployed Nomad, I want to set out a few thoughts regarding the workload orchestrator. When I first started buying the Pis, I didn’t have anything in mind, since they were just a small group of fairly under-powered chips, more a novelty than anything I considered useful. I could easily log into them and do things manually when there were only two or three of them, but as their number increased, and especially when I bought the compute Pis, I started to see the benefit that some form of orchestration could bring.

I had started playing around with k3s, which still seems like a very good choice for my little setup. Even in hindsight that might have been a good choice, since K8s is something we used daily at $WORK. It would have made a good gymnasium to build skills in, which I could then put to use in the real world. Eventually I saw it came down to a choice between the Rancher ecosystem and Hashicorp’s.

I admit to spending a few weeks agonising over this choice. In my relative ignorance, I wanted to do the “right” thing. In the end, what convinced me was simply that I liked the Hashicorp ecosystem better.

I therefore chose Nomad as the workload orchestrator, deferring the choice of storage system for later.

Job 1

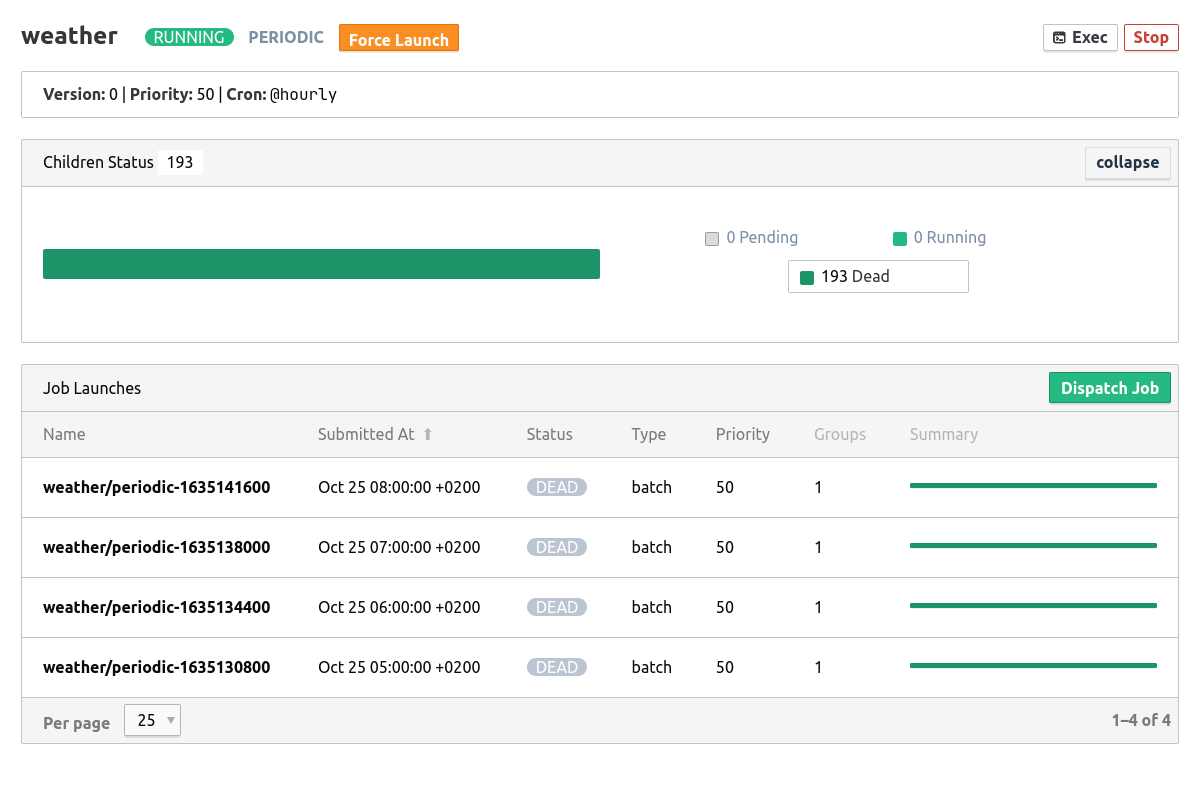

For the first workload, I wanted to take something that was already running and which was actually useful to me, rather than a trivial “hello-world” example. In this case, it was an existing Python script on one of the Pis, which had an ink-hat attached. The Python script was one of their examples, which showed the weather at a certain location.

The script was already on the machine, but there was no scheduling or automatic execution in place. I wanted the script to run on the hour to display hourly updates on my Pi – the perfect nontrivial use case to start with, since it had to use parts of the orchestrator correctly to select the desired piece of hardware.

Voilà

The job description needed to have a few nontrivial parts:

The job should be periodic (in Nomad, a periodic block on a job of type batch):

    periodic {
      cron             = "@hourly"
      prohibit_overlap = true
      time_zone        = "Europe/Rome"
    }

The driver should be raw_exec (see later for configuring this on the agent):

    task "weather" {
      driver = "raw_exec"

      config {
        command = "python3"
        args    = ["<remote path to script>"]
      }

      resources {
        cpu    = 50
        memory = 250
      }
    }
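The raw_exec driver is disabled by default, so the client agent has to opt in before a task like this can be placed. A minimal sketch of the relevant fragment, assuming a Nomad version that uses the plugin block syntax and leaving out the rest of the agent file:

```hcl
# Nomad client agent configuration fragment (not part of the job file).
plugin "raw_exec" {
  config {
    # raw_exec runs commands without isolation, as the same user
    # as the Nomad agent process, so enable it deliberately.
    enabled = true
  }
}
```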

However, most importantly, it needed to select the correct node:

    constraint {
      attribute = "${attr.unique.hostname}"
      value     = "inky.station"
    }
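Assembled into one file, the three fragments might fit together roughly as follows. The job name, group name and datacenter are assumptions made for illustration (dc1 is Nomad's default datacenter name), and the script path is left as the same placeholder.

```hcl
# Sketch of a complete job file; names marked "assumed" are hypothetical.
job "weather" {               # assumed job name
  datacenters = ["dc1"]       # assumed; Nomad's default datacenter name
  type        = "batch"       # periodic is only valid on batch (or sysbatch) jobs

  # Run on the hour, never starting a run while the previous one is active.
  periodic {
    cron             = "@hourly"
    prohibit_overlap = true
    time_zone        = "Europe/Rome"
  }

  # Pin the job to the Pi that carries the display.
  constraint {
    attribute = "${attr.unique.hostname}"
    value     = "inky.station"
  }

  group "display" {           # assumed group name
    task "weather" {
      driver = "raw_exec"

      config {
        command = "python3"
        args    = ["<remote path to script>"]
      }

      resources {
        cpu    = 50           # MHz
        memory = 250          # MB
      }
    }
  }
}
```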

I chose the hostname attribute, which admittedly isn’t the most reliable one since the hostname is issued by the access point, but it allowed me to get up and running fast.
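If the hostname turns out to be too fragile, one alternative is to constrain on client metadata instead. A sketch, assuming a hypothetical meta key named display is set in the client configuration of the relevant node:

```hcl
# Client agent configuration on the Pi that carries the display.
client {
  meta {
    display = "inky"          # hypothetical key and value
  }
}

# Job specification: replaces the hostname constraint.
constraint {
  attribute = "${meta.display}"
  value     = "inky"
}
```

The two blocks live in different files; the trade-off is one extra line of per-node configuration in exchange for a constraint that no longer depends on the network.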

And there you have it: my “datacentre” is up and running!